What If Our Homes Could Be That Smart Too?

I often think back to this question, posed eight years ago during my time at Fibaro in a video called Everything Is Connected. The video, narrated by my then eight-year-old son, envisioned a home smart enough to expand our senses, letting us see the music, touch the weather, or hear the energy around us. It hinted at a future where intelligent devices digitize our physical space, making our homes safer and more enjoyable.

Fast forward to today, and we're on the cusp of making this vision a reality, thanks to a convergence of technologies. Generative AI, powered by transformer models, is paving the way for this transformation. It's not just about creating poems or images, or translating data into natural language and back; it's about redefining how we interact with digital products, computers, and even our smartphones.

Platform Shift: A New Dawn of Interaction

The term 'platform shift' may sound complex, but the concept behind it is straightforward, albeit revolutionary: a transition to a new foundation that spawns innovations unimaginable under the previous paradigm. In this case, it's about AI becoming the new operating system.

Take the evolution of the smartphone, for instance. Initially, smartphones were glorified tools for texting, browsing, and emailing. As the platform matured, however, it gave rise to an expansive world of applications that redefined our daily interactions. Today, our smartphones are our navigators, fitness trackers, and even our virtual shopping assistants, capabilities that were barely conceivable in the early days of smartphones.

Now, let's transpose this narrative to the realm of Generative AI and Large Language Models (LLMs). Initially, the default interface for LLMs was text-based, akin to the SMS app on early smartphones. Now, though, we stand on the brink of a platform shift. Multimodal interaction, where voice, image, and text converge, is still in its early days. Yet even as we begin to understand how multimodal interaction will shape new capabilities and UX, I am seeing early signs of LLMs being able to interpret sound, video, and other kinds of time-series sensor data.

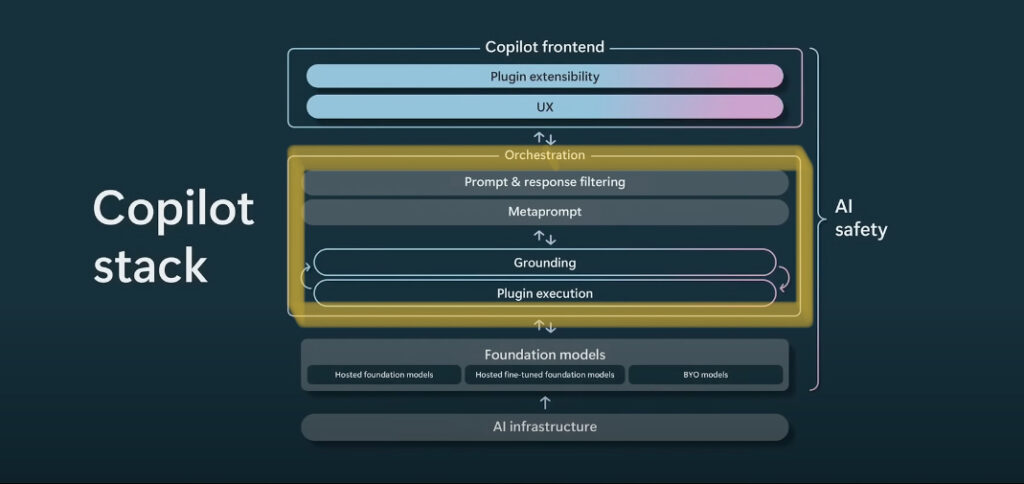

Fusing these different sensor inputs, and having AI understand them together with a person's natural-language request without any predefined training, provides the contextual awareness that smart homes have been missing to date. Here is an example of this orchestration:

Copilot Stack, Microsoft Build 2023

Just as apps like Uber or Instagram were unimaginable before the smartphone platform matured, the applications that will emerge from the multimodal AI platform are beyond our current understanding. When operating systems can handle input and output across modalities, interpret and generate code on the fly, access the internet and other information sources, and then render results visually or audibly in the moment, the way we interact with digital products, and with the physical products that get digitized, fundamentally changes.

The ability of AI to interpret and interact across these modalities is, I believe, akin to the smartphone app revolution, or even bigger. Tomorrow, multimodal AI will redefine how we interact not just with our gadgets but with the world around us, transcending the boundaries between the digital and physical realms. The interfaces for these systems will flip the “form follows function” design principle.

And all this without even touching on how these emerging AI capabilities will be infused into AR and VR glasses. I predict that this time next year we will be talking about the amazing use cases we are seeing on Apple Vision Pro.

Edge AI: The Guardian of Privacy

As we usher in the era of multimodal AI, safeguarding privacy is paramount. On the hardware side, Qualcomm’s vision of a hybrid AI architecture underscores the importance of on-device AI. This model distributes AI workloads between the cloud and edge devices, such as smartphones and IoT devices, so that the combined system is powerful, efficient, and privacy-centric. By keeping part of the AI processing on-device, we keep sensitive data within our homes or on our devices, away from potential prying eyes in the cloud. This is similar to Apple’s on-device approach, which can process a spoken question about your health data without that data ever leaving your device.
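To make the hybrid idea a little more concrete, here is a minimal Python sketch of how a home hub might decide where to run a given AI request. It is not Qualcomm’s architecture or any vendor’s API. The `AIRequest` fields, the `route` function, and the 7-billion-parameter edge limit are hypothetical assumptions chosen purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    EDGE = "edge"    # on-device or in-home hub
    CLOUD = "cloud"  # remote data center


@dataclass
class AIRequest:
    # Hypothetical request shape, for illustration only.
    task: str
    contains_sensitive_data: bool    # e.g. health or in-home sensor data
    estimated_params_billion: float  # size of model the task is assumed to need


# Hypothetical capacity of the local model; real limits depend on
# the hardware, available memory, and how the model is compressed.
EDGE_MAX_PARAMS_BILLION = 7.0


def route(request: AIRequest) -> Target:
    """Decide where a request runs in a simple hybrid edge/cloud setup."""
    # Privacy first: sensitive data stays on the edge, even if that
    # means answering with a smaller local model.
    if request.contains_sensitive_data:
        return Target.EDGE
    # Otherwise prefer the edge when the local model is large enough
    # (lower latency, works offline) and fall back to the cloud.
    if request.estimated_params_billion <= EDGE_MAX_PARAMS_BILLION:
        return Target.EDGE
    return Target.CLOUD


if __name__ == "__main__":
    requests = [
        AIRequest("summarize heart-rate trend", True, 30.0),
        AIRequest("turn on porch light", False, 1.0),
        AIRequest("plan a two-week trip", False, 70.0),
    ]
    for r in requests:
        print(f"{r.task}: {route(r).value}")
    # summarize heart-rate trend: edge
    # turn on porch light: edge
    # plan a two-week trip: cloud
```

The design choice here is deliberately simple. Privacy is checked before capability, so sensitive data never reaches the cloud path. A real hybrid system would also weigh factors such as battery, latency, and connectivity.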

Now imagine having your own AI that understands the context around you as well as the health data from your wearable. With Edge AI, sharing information with a private AI can take personalization to a new level. Today, I run a 7-billion-parameter LLM on my iPhone 15 Pro without an internet connection. It’s not hard to imagine the benefits of smart home platforms having some form of Edge AI within the home. See Samsung’s Home AI Edge Hub announcements at SDC23.

Call to Action: Dare to Solve the Impossible

The convergence of generative AI, multimodal interaction, and edge AI paints a picture of endless possibilities. It's a call for innovators and thinkers to venture beyond the superficial and tackle the hard problems that once seemed insurmountable.

Entrepreneurs and companies poised to pioneer in this new era will not merely adapt; they will shape the narrative. It's about envisioning and realizing solutions that redefine the norms, solutions that stand as testaments to human ingenuity in the face of complexity.

As we embark on this exhilarating journey toward the new smart home, the melding of digital and physical realms, and the promise of preserved privacy, let’s dare to think hard, think new, and think forward. The narrative of tomorrow hinges on the audacious actions we take today. Are you ready to take the leap?

# # #

Rich Bira, SVP of Product Innovation, is actively engaged in the AI space and is a member of the Consumer Technology Association’s AI in Health Care and General Principles of AI/ML standards committees.